Mostly Right

Here's a short anecdote from my work with LLMs:

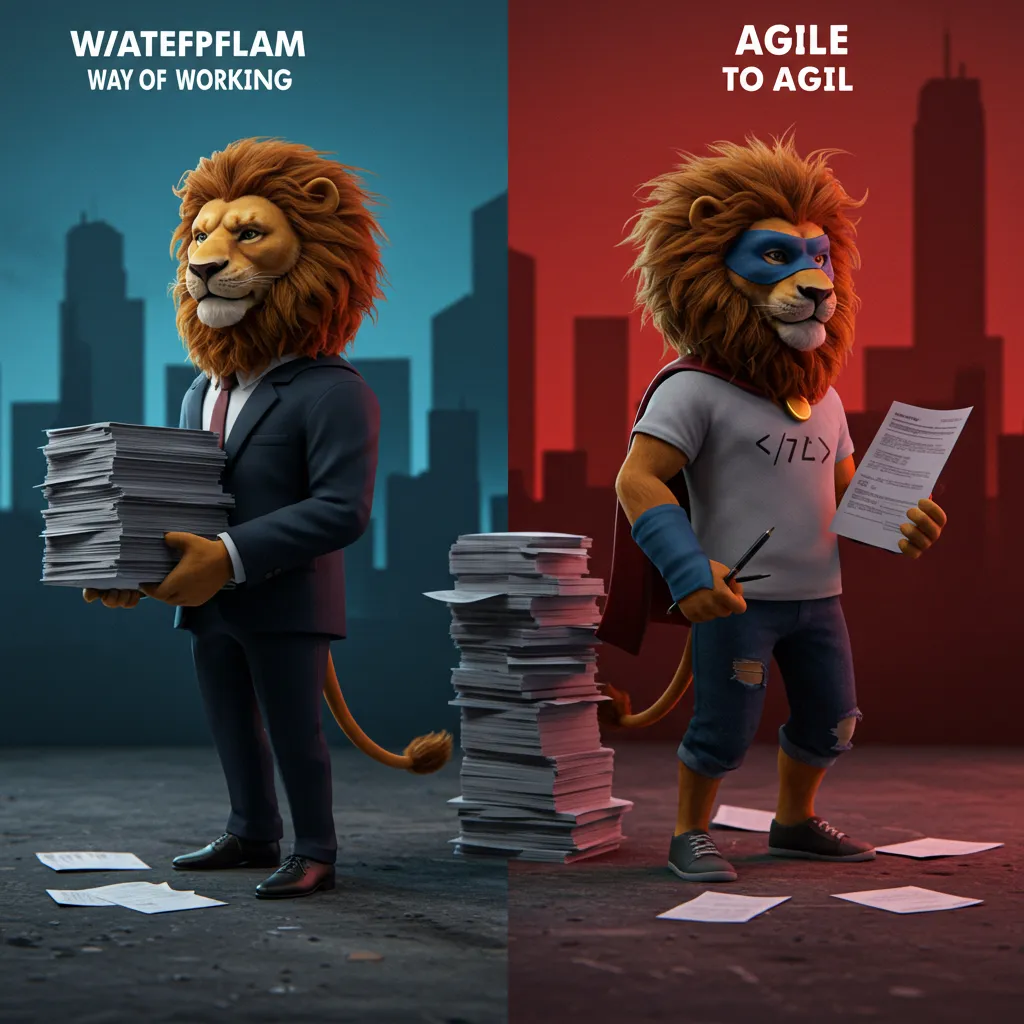

Recently I've been involved a lot in compliance topics at work. Part of this involves presenting certain compliance topics to others, and given the dry nature of the topic, I decided to invent a few super-hero lions to show up a couple of times on the slides: the Comp-Lions.[1]

So I used an LLM to create some pictures. Here's one that ultimately didn't make it into the presentation.[2]

Anyways, I know the limitations of LLMs when it comes to the correct number of fingers or toes, so I counted each claw on each paw for each lion. And in doing so, I had several moments of extreme clarity, as if I were floating above myself, looking down on me as I counted these claws, pondering the fundamental life choices that led me up to this point.

Anyways, all claws on all paws on all lions were as expected, so I gave the presentation with confidence and was silently high-fiving myself for a job well done.

And then, almost immediately after the presentation, the feedback came in about the lions. No, not about the claws. Turns out some of the lions had five legs, and while I was counting all claws on each paw of all five legs per lion, I didn't even notice that something else was amiss.

Oh well.[3]